ACES in 10 Minutes


May 17, 2014

Lately I’ve been taking a hard look at the Academy Color Encoding System, aka ACES, and trying to wrap my head around it. There are a handful of decent white papers on the topic but they tend to be overly technical. By pulling tidbits from multiple sources, one can come to a decent understanding of the hows and whys of ACES, but I was hoping to find some kind of overview: something that presented the “need to knows” in a logical and concise way and wouldn’t require a big time commitment to understand. I did not find such a resource, so I’m writing it myself.

Conceptually, I love ACES. In practice, I don’t have a great deal of hands-on experience with it. What I do know comes from my own research and extensive testing in Resolve. It's quite brilliantly conceived, but it seems to be catching on rather slowly despite established standards and practices and a good track record. Moving forward, I think that as the production community moves further and further from HDTV, ACES will emerge as the most appropriate workflow.

The principal developer of ACES is the Academy of Motion Picture Arts and Sciences (AMPAS).

This is a broad description, but it seems to me that the Academy’s hope for ACES is to solve two major problems.

1. To create a theoretically limitless container for seamless interchange of high resolution, high dynamic range, wide color gamut images regardless of source. ACES utilizes a file format that can encode the entire visible spectrum across roughly 30 stops of dynamic range. Once transformed, all source material is described in this system in exactly the same way.

2. To create a future-proof “Digital Source Master” format in which the archive is as good as the source. ACES utilizes a portable programming language specifically for color transforms called CTL (Color Transform Language). The idea is that any project mastered in ACES would never need to be re-mastered. As future distribution specifications emerge, a new color transform is written and applied to the ACES data to create the new deliverable.

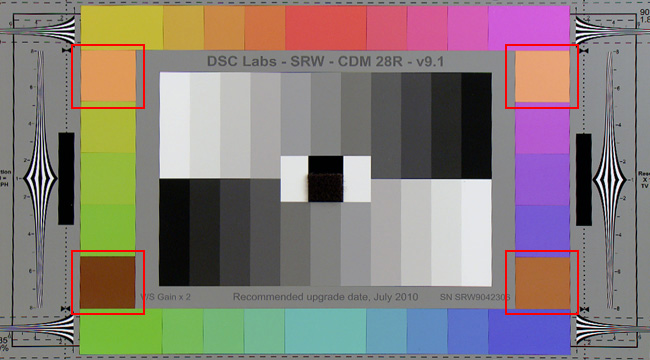

The key to understanding ACES is to acknowledge the difference between “scene referred” and “display referred” images.

A scene referred image is one whose light values are recorded as they existed at the camera focal plane before any kind of in-camera processing. These linear light values are directly proportional to the objective, physical light of the scene exposure. By extension, if an image is scene referred then the camera that captured it is little more than a photon measuring device.
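To make "directly proportional" concrete, here is a minimal sketch of exposure arithmetic on scene referred linear values. The function names and the 0.18 mid gray convention are my own assumptions for illustration, not part of any ACES specification.

```python
import math


def expose(linear_value: float, stops: float) -> float:
    """Scale a scene linear value by a number of stops (hypothetical helper).

    One stop up doubles the light, one stop down halves it.
    """
    return linear_value * 2.0 ** stops


def stops_between(linear_a: float, linear_b: float) -> float:
    """How many stops brighter linear_b is than linear_a (hypothetical helper)."""
    return math.log2(linear_b / linear_a)


MID_GRAY = 0.18  # conventional 18% gray, assumed here as a reference point

one_stop_over = expose(MID_GRAY, 1.0)    # twice the light
two_stops_under = expose(MID_GRAY, -2.0)  # a quarter of the light

print(one_stop_over, two_stops_under)
print(stops_between(two_stops_under, one_stop_over))
```

Because the values are linear, an exposure change is just a multiplication, which is part of why scene referred data is so convenient to work with.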

A display referred image is one defined by how it will be displayed. Rec.709, for example, is a display referred color space, meaning the contrast range of Rec.709 images is mapped to the contrast range of the display device, an HD television.

Beyond this key difference, there are several other new terms and acronyms used in ACES. These are the “need to knows”.

IDT, Input Device Transform:

Transforms source media into scene referred, linear light, ACES color space. Each camera type or imaging device requires its own IDT.

LMT, Look Modification Transform:

Once images are in ACES color space, the LMT provides a way to customize a starting point for color correction. The LMT doesn't change any image data, whereas an actual grade works directly on the ACES pixels. An example of an LMT would be a "day for night" or "bleach bypass" look.

RRT, Reference Rendering Transform:

Controlled by the ACES Committee and intended to be the universal standard for transforming ACES images from their scene referred values to high dynamic range output referred values. The RRT is where the images are rendered, but it is not optimized for any one output format, so it requires an ODT to ensure the result is correct for the specific output target.

ODT, Output Device Transform:

Maps the high dynamic range output of the RRT to display referred values for specific color spaces and display devices. Each target type requires its own ODT. In order to view ACES images on a broadcast monitor for example, they must first go through the RRT and then the Rec.709 ODT.

The ACES RRT and ODT work together like a display or output LUT in a more conventional video workflow, for example when we use a Rec.709 3D LUT to monitor LogC images from an Arri Alexa. The combination of RRT and ODT is referred to as the ACES Viewing Transform. If you have ACES files, you will always need an ACES Viewing Transform to view them. An additional step would be to use an LMT to customize the ACES Viewing Transform for a unique look.

ACES Encoded OpenEXR:

Frame-based file format used in ACES. It is scene referred, linear, RGB, 16-bit floating point (half precision), which allows 1024 steps per stop of exposure across roughly 30 stops of dynamic range, with a color gamut that exceeds human vision.
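The "1024 steps per stop" and "30 stops" figures fall out of how half precision floats are laid out (a 10 bit mantissa and a 5 bit exponent). As a rough check, the sketch below uses Python's standard `struct` module, which can decode half floats with the `e` format code. The helper name is mine, and I'm counting only normalized values, which is an assumption about how the 30 stop figure is usually derived.

```python
import math
import struct


def half_from_bits(bits: int) -> float:
    """Decode a 16-bit pattern as an IEEE 754 half precision float."""
    return struct.unpack("<e", struct.pack("<H", bits))[0]


# Count the distinct half float values inside one stop, from 1.0 up to (not including) 2.0.
steps_in_one_stop = sum(
    1
    for bits in range(0x0000, 0x7C00)  # every non-negative finite bit pattern
    if 1.0 <= half_from_bits(bits) < 2.0
)

smallest_normal = half_from_bits(0x0400)  # 2**-14, smallest normalized value
largest_finite = half_from_bits(0x7BFF)   # 65504.0, largest finite value
stops_of_range = math.log2(largest_finite / smallest_normal)

print(steps_in_one_stop)  # 1024
print(stops_of_range)     # just under 30
```

Every stop in the normalized range gets the same 1024 code values, which is quite different from an integer encoding where the brightest stop uses half of all available codes.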

ACES Workflow utilizes a linear sequence of transforms to create a hybrid system that begins as scene referred and ends as display referred. It is a theoretically limitless space in which we work at lossless image quality (scene referred) but inevitably will need to squeeze into a smaller and more manageable space for viewing and/or delivery (display referred).

Any image that goes into ACES is first transformed to its scene referred, linear light values with an Input Device Transform or IDT. This IDT is specific to the camera that created the image and was written in ACES Color Transform Language by the manufacturer. This transform is extremely sophisticated and essentially deconstructs the source file, taking into account all the specifics of the capture medium, to return as close as possible to the original light of the scene exposure. Doing this allows multiple camera formats to be reduced to their basest, most universal state and allows access to every last bit of picture information available in the recording.

Big caveat - an ACES IDT requires viable picture information to do the transform so if it’s not there because of poor exposure then there's nothing that can be done to bring it back. Dynamic range cannot be extended and image quality lost through heavy compression cannot be restored. The old adage, “Garbage In, Garbage Out”, is just as true in ACES.

In ACES, scene referred images are represented as linear light, which does not correspond to the way the human eye perceives light. They are therefore not practically viewable as-is and must be transformed into some display referred format like Rec.709. This is done at the very end of the chain with the Output Device Transform, or ODT.
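To get a feel for the gap between linear light and a display referred encoding, here is a small sketch that pushes a range of exposures through the standard Rec.709 transfer curve. To be clear about my assumptions: this is only the Rec.709 encoding function, not the ACES RRT or ODT (which also apply tone mapping), and the 0.18 mid gray reference is a convention I'm assuming for illustration.

```python
def rec709_oetf(linear: float) -> float:
    """Rec.709 opto-electronic transfer function.

    Input is clipped to [0, 1] first, because a display referred
    encoding has a bounded range while scene linear values do not.
    """
    linear = min(max(linear, 0.0), 1.0)
    if linear < 0.018:
        return 4.5 * linear
    return 1.099 * linear ** 0.45 - 0.099


MID_GRAY = 0.18  # assumed reference exposure in scene linear

# Walk from 4 stops under to 4 stops over mid gray.
for stops in range(-4, 5):
    linear = MID_GRAY * 2.0 ** stops
    encoded = rec709_oetf(linear)
    print(f"{stops:+d} stops  linear={linear:7.4f}  encoded={encoded:.3f}")
```

In the printout the linear column doubles with every stop while the encoded column moves in much more even steps, and anything more than about two and a half stops over mid gray simply clips. That clipping is exactly the "squeeze" a display referred format imposes.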

There are, however, a few more transforms that happen in between the IDT and ODT, namely the LMT, Look Modification Transform, where grading and color correction happen, and the RRT, Reference Rendering Transform, where the ACES scene referred values begin their transformation to a display / output format for viewing.

Because this process is very linear it should be fairly simple to explain in a diagram. Let’s try.
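The same chain can also be sketched as function composition. Everything in the block below is a toy stand-in that I made up for illustration: the real IDTs, RRT and ODTs are far more sophisticated, and none of these curves come from the ACES specification. The only thing the sketch is meant to show is the order of the stages and how the RRT and ODT combine into a viewing transform.

```python
from functools import reduce
from typing import Callable, Tuple

RGB = Tuple[float, float, float]
Transform = Callable[[RGB], RGB]


def compose(*stages: Transform) -> Transform:
    """Chain transforms so they run left to right."""
    return lambda rgb: reduce(lambda value, stage: stage(value), stages, rgb)


def idt(rgb: RGB) -> RGB:
    """Toy IDT: undo a hypothetical gamma 2.0 camera encoding to get linear light."""
    return tuple(c ** 2.0 for c in rgb)


def lmt(rgb: RGB) -> RGB:
    """Toy LMT: a hypothetical look that desaturates toward the channel mean."""
    mean = sum(rgb) / 3.0
    return tuple(mean + 0.8 * (c - mean) for c in rgb)


def rrt(rgb: RGB) -> RGB:
    """Toy RRT: a simple x / (1 + x) curve that rolls off highlights."""
    return tuple(max(c, 0.0) / (1.0 + max(c, 0.0)) for c in rgb)


def odt(rgb: RGB) -> RGB:
    """Toy ODT: clip to the display range and apply a 1/2.4 display gamma."""
    return tuple(min(max(c, 0.0), 1.0) ** (1.0 / 2.4) for c in rgb)


# RRT + ODT together play the role of the ACES Viewing Transform.
viewing_transform = compose(rrt, odt)

# Source media -> scene referred -> look -> rendered -> display referred.
full_pipeline = compose(idt, lmt, viewing_transform)

camera_pixel = (0.9, 0.5, 0.2)  # made-up encoded camera values
print(full_pipeline(camera_pixel))
```

Swapping the `odt` function for a different target is all it would take to produce a different deliverable from the same scene referred data, which is the whole point of keeping the stages separate.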


I hope this overview is intuitive but in my desire to simplify, I easily could have overlooked important components. It's a very scientific topic and I'm coming at this from the practical viewpoint of a technician. I’m always open to feedback.

I would encourage anyone reading this who is interested in ACES to join the ACES community forum at www.ACEScentral.com and read up on the latest developments and implementations of the project.

OFFICIAL ACES INFORMATION FROM THE ACADEMY OF MOTION PICTURE ARTS AND SCIENCES:

http://www.oscars.org/science-technology/sci-tech-projects/aces

http://www.oscars.org/science-technology/council/projects/pdf/ACESOverview.pdf

MORE RELATED ARTICLES:

http://www.finalcolor.com/acrobat/ACES_Nucoda%20r1_web.pdf

http://www.poynton.com/w/ACES/

http://www.studiodaily.com/2011/02/is-justifieds-new-workflow-the-future-of-cinematography/

http://www.fxguide.com/featured/the-art-of-digital-color/

http://simon.tindemans.eu/essays/scenereferredworkflow

This article was updated 9/4/14 with input from Jim Houston, Academy co-chair of the ACES project. It was updated again on 4/2/17 with input from Steve Tobenkin from ACES Central.

© 2021 Bennett Cain / All Rights Reserved /
