Syncing Audio in Resolve 9 Lite


Happy Sunday. It's a beautiful one here in NY, so I can't wait to get on the bike. That said, this will be quick.

Whenever I spend an aggravating amount of time trying to figure something out, I feel that it's a worthy topic for a blog post. I've been checking out the Resolve 9 Lite Beta and couldn't figure out for the life of me how to sync audio. I couldn't find any decent tutorials or workflow guides online or on the Blackmagic Design (BMD) forums. After enough right-clicking, I finally found it.

From the top -

2 Create your project and set it up according to your camera media specs.

3 In MEDIA, load your camera files along with the corresponding sound files. It helps to make a separate bin for each in the Media Pool window, like "Picture" and "Sound".

4 In CONFORM, create a new Timeline and name it something like SYNCED, or use the camera roll number. Whatever works for you, really.

Now select both the audio and video bins in the Media Pool, then RIGHT CLICK on the Timeline you just created and choose "Link With Audio From Selected Bins". A prompt will come up confirming your selections. Click OK.

5 You can confirm that you now have sync sound on your video clips by scrubbing through the timeline.

For now, there is no way to sync audio without synchronous timecode, and no way to slip sync in the event of drift. That's a problem, but one that I'm sure will be addressed. Another problem is that this only works with truly MOS video clips. If the ProRes files (or whatever you're shooting) contain audio channels, even empty ones with no actual sound, Resolve will not overwrite those channels with the new sound.
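Resolve doesn't say how "Link With Audio From Selected Bins" makes its pairings, but the timecode requirement above suggests it lines files up by timecode. Here's a rough Python model of that idea, to show why synchronous timecode matters. It is not Resolve's actual logic; the 24 fps non-drop-frame rate, the `Clip` type, and all clip names and timecodes are assumptions for illustration.

```python
from dataclasses import dataclass

FPS = 24  # assumption: every file uses 24 fps non-drop-frame timecode


def tc_to_frames(tc: str, fps: int = FPS) -> int:
    """Convert an "HH:MM:SS:FF" timecode string to an absolute frame count."""
    hh, mm, ss, ff = (int(part) for part in tc.split(":"))
    return ((hh * 60 + mm) * 60 + ss) * fps + ff


@dataclass
class Clip:
    name: str
    start: int     # start timecode, in frames
    duration: int  # length, in frames

    @property
    def end(self) -> int:
        return self.start + self.duration  # exclusive end frame


def overlap(a: Clip, b: Clip) -> int:
    """Number of frames during which both clips were rolling."""
    return max(0, min(a.end, b.end) - max(a.start, b.start))


def match_audio(video_clips, audio_clips):
    """Pair each video clip with the audio file whose timecode overlaps it most.

    Returns {video name: (audio name, offset)}, where offset is how many frames
    into the audio file the picture starts (negative if picture rolled first),
    or None when no audio file overlaps the clip at all.
    """
    matches = {}
    for video in video_clips:
        best = max(audio_clips, key=lambda audio: overlap(video, audio), default=None)
        if best is not None and overlap(video, best) > 0:
            matches[video.name] = (best.name, video.start - best.start)
        else:
            matches[video.name] = None
    return matches


# Hypothetical clips, for illustration only.
videos = [
    Clip("A001_C001", tc_to_frames("10:00:00:00"), 240),
    Clip("A001_C002", tc_to_frames("10:05:00:00"), 480),
    Clip("A001_C003", tc_to_frames("11:00:00:00"), 120),
]
sounds = [
    Clip("T001.wav", tc_to_frames("09:59:58:00"), 600),
    Clip("T002.wav", tc_to_frames("10:04:59:12"), 720),
]
print(match_audio(videos, sounds))
```

Here A001_C001 lands 48 frames into T001.wav and A001_C002 lands 12 frames into T002.wav, while A001_C003 gets nothing because no sound file shares its timecode. That's the whole catch: without matching timecode there's nothing to line up. And since each clip gets a single fixed offset, drift between the camera and recorder clocks can't be corrected this way; the offset only lines up the start.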

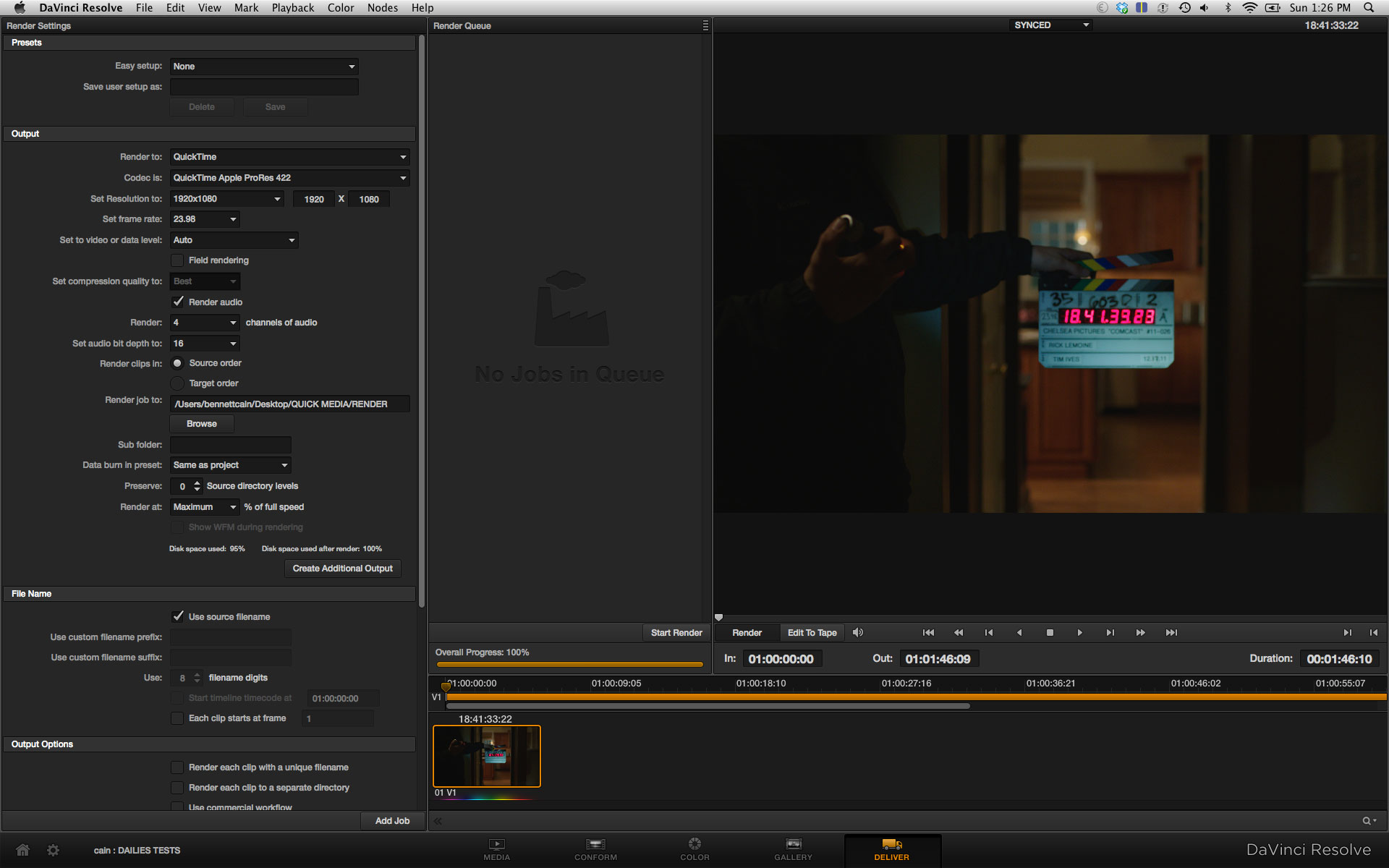

6 In DELIVER, make sure to check "Render Audio" in Output and set the number of audio channels you need.
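If a clip still plays silent after the link step, the MOS caveat is the first thing to check. Assuming you have FFmpeg's `ffprobe` installed (it's a separate free tool, not part of Resolve), this small Python sketch reports how many audio streams each camera file carries. The script and its function names are my own illustration; only the `ffprobe` flags are real.

```python
import json
import shutil
import subprocess
import sys


def audio_stream_count(ffprobe_json: str) -> int:
    """Count the audio streams listed in ffprobe's JSON output."""
    data = json.loads(ffprobe_json)
    return len(data.get("streams", []))


def probe_audio_streams(path: str) -> int:
    """Ask ffprobe for the audio streams in a media file and count them."""
    result = subprocess.run(
        ["ffprobe", "-v", "error",          # only print real errors
         "-select_streams", "a",            # audio streams only
         "-show_entries", "stream=index",   # one small entry per stream
         "-of", "json", path],
        capture_output=True, text=True, check=True,
    )
    return audio_stream_count(result.stdout)


def main(paths):
    if shutil.which("ffprobe") is None:
        sys.exit("ffprobe not found on PATH")
    for path in paths:
        n = probe_audio_streams(path)
        status = "MOS" if n == 0 else f"{n} audio stream(s), may not get linked sound"
        print(f"{path}: {status}")


# Only runs when file paths are given on the command line.
if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1:])
```

Run it as something like `python check_mos.py *.mov` (the filename is just an example). Anything that isn't reported as MOS has audio channels, even if they're silent, so Resolve won't replace them.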

There you have it: Color Corrected, Sync Sound Dailies. For free software, this is a pretty powerful solution for HD deliverables. I'm not going to get into a bunch of software comparisons or pros and cons right now, but I do think with Lite you get an awful lot of BANG for your "No Buck". Hard to argue with that.

© 2021 Bennett Cain / All Rights Reserved /