NAB 2014 Post-Mortem


May 10, 2014

A NAB blog post one month after the show? Better late than never, but this is pretty bad. So what’s left to say? Well, in my opinion this year’s show was, in a word, underwhelming. Among the countless new wares on display there were really only a handful that would stop you in your tracks with the freshness of their concept or utility. If my saying this comes off as waning enthusiasm, then it might be true, and I've been thinking a lot about why that is.

Not to point out the obvious but over the last 5 years monumental things have happened for the filmmaker. Within a very short span what was prohibitively expensive and difficult to achieve for 100 years of movie making became affordable and thus accessible to a whole new generation of artists. For the first time ever, anyone with a couple of grand could produce cinematic images and find an audience.

This was a two-fold revelation –

Manufacturing, imaging, and processing breakthroughs along with mobile technology facilitated high-quality, low-cost acquisition and postproduction, and then new social media avenues provided a waiting pool of resources and viewers.

In 1979 on the set of Apocalypse Now, Francis Ford Coppola said,

This statement no doubt sounded ludicrous in 1979 but the sentiment of technology empowering art is a beautiful one.

Turns out he was right and it did happen, in a big way, and predictably these developments not only empowered artists but ignited an industry-wide paradigm shift. Over the last decade, media has been on a path toward democratization, and it’s been a very exciting and optimistic time to be in the business. But here we are now in 2014; the dust has settled and the buzz has worn off a bit. It's back to business as usual but in our new paradigm, one defined by a media experience that's now digital from end to end and completely mobile. One where almost everyone is carrying around a camera in their pocket and being a “cameraperson” is a far more common occupation than ever before.

Because so much has happened in such a short time, it's now a lot harder for new technology to seize the public’s imagination like the first mass-produced, Raw recording digital cinema camera did. In the same vein, a full frame DSLR that shoots 24p video was a big deal. A sub $100k digital video camera with dynamic range rivaling film was a big deal. Giving away powerful color correction and finishing software for free was a big deal. I’m always looking for the next thing, the next catalyst, and with a few exceptions, I didn’t see much in this year’s NAB offerings. I predict more of the same in the immediate future – larger resolution, wider dynamic range, and ever smaller and cheaper cameras. This is no doubt wonderful for filmmakers and advances the state of the art but in my opinion, unlikely to be as impactful on the industry as my previous examples.

That said this is not an exhaustive NAB recap. Instead I just want to touch on a few exhibits that really grabbed me. New technology that will either -

A. Change the way camera / media professionals do their job.

B. Show evidence of a new trend in the business or a significant evolution of a current one.

Or both.

Dolby Vision

Dolby's extension of their brand equity into digital imaging is a very smart move for them. We've been hearing a lot about it but what exactly is it? In 2007 Dolby Laboratories, Inc. bought the Canadian company BrightSide Technologies, integrated its processes, and renamed the result Dolby Vision.

It is a High Dynamic Range (HDR) image achieved through ultra-bright, RGB LED backlit LCD panels. Images for Dolby Vision require a different finishing process and a higher bandwidth television signal, as it uses 12 bits per color component instead of the standard 8 bits. This allows for an ultra wide gamut image at a contrast ratio greater than 100,000:1.
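To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the bit depths are counted per color component and that a contrast ratio converts to photographic stops with a base-2 logarithm. The function names are my own and have nothing to do with Dolby's tooling.

```python
import math

def code_values(bits_per_component: int) -> int:
    """Number of distinct levels a single color component can take."""
    return 2 ** bits_per_component

def contrast_in_stops(contrast_ratio: float) -> float:
    """Express a contrast ratio (100000 for 100,000:1) in stops."""
    return math.log2(contrast_ratio)

if __name__ == "__main__":
    for bits in (8, 12):
        print(f"{bits}-bit: {code_values(bits)} levels per component")
    print(f"100,000:1 is about {contrast_in_stops(100_000):.1f} stops")
```

Going from 8 to 12 bits multiplies the available steps per component by 16, which is what leaves room to spread tonal values over a much brighter display without visible banding.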

Display brightness is measured in candelas per square meter (cd/m²), or “nits” in engineering parlance. Coming from a technician's point of view, where I'm used to working at Studio Levels, meaning my displays measure 100 nits, hearing that Dolby Vision operates at 2000-4000 nits sounded completely insane to me.

For context, a range of average luminance levels –

Professional video monitor calibrated to Studio Level: 100 nits

Phone / mobile device, laptop screen: 200-300 nits

Typical movie theater screen: 40-60 nits

Home plasma TV: >200 nits

Home LCD TV: 200-400 nits

Home OLED TV: 100-300 nits

Current maximum Dolby Vision test: 20,000 nits

Center of 100 watt light bulb: 18,000 nits

Center of the unobstructed noontime sun: 1.6 billion nits

Starlight: >.0001 nit
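Because we tend to think about exposure in stops rather than nits, it can help to convert a few of the figures above. This is only a sketch: it treats each doubling of luminance as one stop, uses 100 nits as the reference, and takes 50 nits as a stand-in for the 40-60 nit theater range. The names are mine.

```python
import math

STUDIO_LEVEL_NITS = 100.0  # reference level quoted in the text

def stops_relative_to_studio(nits: float) -> float:
    """Stops above (+) or below (-) a 100 nit studio display."""
    return math.log2(nits / STUDIO_LEVEL_NITS)

# Values taken from the list above; the theater figure is an assumed midpoint.
LEVELS = {
    "Movie theater screen (assumed 50 nits)": 50.0,
    "Dolby Vision, low end": 2000.0,
    "Dolby Vision, high end": 4000.0,
    "Dolby Vision maximum test": 20000.0,
}

if __name__ == "__main__":
    for name, nits in LEVELS.items():
        print(f"{name}: {stops_relative_to_studio(nits):+.1f} stops")
```

By this measure a 2000 nit display sits a little over four stops above Studio Level, and a theater screen about one stop below it.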

After seeing the 2000 nit demo unit at Dolby’s booth, I now understand that display brightness at these high levels is the key to creating a whole new level of richness and contrast. It’s in fact quite a new visual experience and “normal” images at 100 nits seem quite muddy in comparison.

These demonstrations are just a taste of where this is going though. According to Dolby's research, most viewers want images that are 200 times brighter than today’s televisions. If this is the direction display technology is going then it is one that's ideal for taking advantage of the wide dynamic range of today's digital cinema cameras.

Because it poses a challenge to an existing paradigm, and even though there are serious hurdles, Dolby Vision is rich with potential and so was, for me, the most interesting thing I saw at this year's NAB show. It really got me thinking about what the ramifications would be for the cinematographer, camera and video technicians, and working on the set with displays this bright. It would require a whole new way of thinking about and evaluating relative brightness, contrast, and exposure. Not to mention that a 4000 nit monitor on the set could theoretically light the scene! This is a technology I will continue to watch with great interest.

Andra Motion Focus

Matt Allard of News Shooter wrote this excellent Q & A on the Andra >>>

Andra is interesting because it's essentially an alternative application of magnetic motion capture technology. Small sensors are worn under the actor's clothing, some variables are programmed into the system, and the Andra does the rest. The demonstration at their booth seemed to work quite well and it's an interesting application of existing, established technology. It does indeed have the potential to change the way lenses are focused in production, but I do have a few concerns that could potentially prevent it from being 100% functional on the set.

1. Size. It's pretty big for now. As the technology matures, it will no doubt get smaller.

Image from Jon Fauer's Film and Digital Times >>>

2. Control. Andra makes a FIZ handset for it called the ARC that looks a bit like Preston's version. It can also be controlled by an iPad but that to me seems impractical for most of the 1st ACs I know. In order for Andra to work, shifting between the system's automatic control and manual control with the handset would have to be completely seamless. If Auto Andra wasn't getting it, you would need to already be in the right place on the handset so that you can manually correct. It would have to be a perfectly smooth transition between auto and manual or I don't see this system being one that could replace current focus pulling methodology.

3. Setup time. Andra works by creating a 3D map of the space around the camera and this is done by setting sensors. A 30x30 space requires setting about 6 sensors apparently. Actors are also required to wear sensors. Knowing very well the speed at which things happen on the set and how difficult it can be for the ACs to get their marks, Andra's setup time would need to be very fast and easy. If it takes too long, it will quickly become an issue and then it's back to the old fashioned way - marks, an excellent sense of distance, and years of hard earned experience.
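For anyone wondering what a tracked 3D position actually buys you, the core idea can be sketched in a few lines. To be clear, this is not Andra's algorithm, which I haven't seen. It simply assumes the system knows the camera's focal plane and the actor's sensor as points in the same coordinate space, in which case the focus distance is the straight-line distance between them. The names and coordinates are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]  # x, y, z in any consistent unit

def focus_distance(camera: Point, subject: Point) -> float:
    """Straight-line distance from the camera position to the subject."""
    return math.dist(camera, subject)

if __name__ == "__main__":
    camera = (0.0, 0.0, 0.0)
    actor = (3.0, 4.0, 0.0)  # hypothetical tracked sensor position
    print(f"Focus distance: {focus_distance(camera, actor):.2f}")
```

A real system would still have to map that distance onto a specific lens's focus scale and cope with sensor noise, which is exactly where a seamless manual override matters.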

Arri UWZ 9.5-18mm Zoom Lens

We associate lenses this wide with undesirable characteristics such as barrel distortion, architectural bowing, and chromatic aberrations around the corners and frame edges. Because Arri's new UWZ Lens exhibits none of these characteristics, it offers a completely fresh perspective for wide angle images.

DaVinci Resolve 11

Now a fully functional Non-Linear Editor!

One potential scenario, imagine a world where all digital media could be reviewed, edited, fixed and enhanced, and then output for any deliverable in one single software. Imagine if said software was free and users at all levels and disciplines of production and post-production were using it. Just how much faster, easier, and cheaper would that make everything across the board from acquisition to delivery? Forget Blackmagic Design's cameras, Resolve is their flagship and what will guarantee them relevancy. It is the conduit through which future filmmakers will tell their stories.

Being a Digital Imaging Technician, I can't help but wonder though what will happen to on-set transcoding when perhaps in the near future, editors themselves are working in Resolve and are able to apply Lookup Tables and color correction to the native, high resolution media they're working with.

Sony

Sony always has one of the largest booths and the most impressive volume of quality new wares at NAB. Being an international corporation with significant resources spread out over multiple industries, I think they've done a surprisingly good job of investing in the right R&D and have pushed the state of the art of digital imaging forward. A serious criticism, however, is that they do a very poor job of timing the updates on their product lines. Because of this, many of us Sony users have lost a lot of money and found ourselves holding expensive product with greatly reduced value as little as a year after purchase. Other than that, Sony continues to make great stuff and I personally have found their customer service to be quite good over the years. I always enjoy catching up at the show with my Sony friends from their various outposts around the world.

Sony F55 Digital Camera

The one thing that Sony has really gotten right is the F55. Through tireless upgrades, it has become the Swiss Army Knife of digital cinema cameras. One quick counterpoint: after seeing F55 footage against F65 footage at Sony's 4k projection, I have to say that I prefer the F65's image a lot. It is smoother and more gentle, and the mechanical shutter renders movement in a much more traditionally cinematic way. It's sad to see that camera so maligned as the emphasis is now very much on the F55. Sony is constantly improving this camera with major features coming such as ProRes and DNxHD codecs, extended dynamic range with SLog 3, 4k slow motion photography, and more. Future modular hardware accessories will allow the camera to be adapted for use in a variety of production environments.

Like the Shoulder-mount ENG Dock.

This looks like it would be very comfortable to operate for those of us who came up with F900's on our shoulders.

While this wasn't a new announcement, another modular F55 accessory on display at the show was this Fiber Adapter for 4k Live Production which can carry a 3840x2160 UHDTV signal up to 2000 meters over SMPTE Fiber. If the future of digital motion picture cameras is modular, then I think Sony has embraced it entirely with the F55.

While F55 Firmware Version 4 doesn't offer as much as V3 did, 4k monitoring over HDMI 2.0 is a welcome addition as it's really the only practical solution at present. 4x 3G-SDI links pose serious problems; Sony is aware of this and has invested substantially in R&D for a 4k over IP solution using 10 gig ethernet.

While it's difficult to discern what you're actually looking at in the below image, the 4k SDI to IP conversion equipment was on display at the show.

If this technology could become small and portable enough that a Quad SDI to IP converter could live on the camera, your cable runs could be a single length of cheap Cat6 ethernet cable to the engineering station where it would get converted back to an SDI interface. This would solve the current on-set 4k monitoring conundrum. In the meantime, there really aren't a ton of options and Sony currently has only two 30" 4k monitors with 4x 3G-SDI interface that could conceivably be used on the set.
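To get a feel for why one ethernet cable is plausible in place of four SDI runs, here is some rough arithmetic. The assumptions are mine: 10 bit 4:2:2 video (20 bits per pixel on average), active picture only with no blanking, audio, or network overhead, and the nominal figures of 2.97 Gbit/s per 3G-SDI link and 10 Gbit/s for 10 gig ethernet. Frame rate is left as a parameter since it changes the answer considerably.

```python
GBIT = 1_000_000_000  # bits in one gigabit (decimal)

QUAD_3G_SDI_GBPS = 4 * 2.97     # four nominal 3G-SDI links
TEN_GIG_ETHERNET_GBPS = 10.0    # nominal line rate, before overhead

def active_video_gbps(width: int, height: int, fps: float,
                      bits_per_pixel: int = 20) -> float:
    """Data rate of the active picture only, in Gbit/s."""
    return width * height * fps * bits_per_pixel / GBIT

if __name__ == "__main__":
    for fps in (24, 30, 60):
        rate = active_video_gbps(3840, 2160, fps)
        print(f"UHD at {fps} fps: {rate:.2f} Gbit/s of active picture")
    print(f"Quad 3G-SDI capacity: {QUAD_3G_SDI_GBPS:.2f} Gbit/s")
    print(f"10 gig ethernet capacity: {TEN_GIG_ETHERNET_GBPS:.2f} Gbit/s")
```

At 30 fps the picture alone comes to just under 5 Gbit/s, which leaves comfortable headroom on a 10 gig link. At 60 fps it is very close to the nominal line rate, so real-world overhead would matter a great deal.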

The PVM-X300 LCD which was announced last year and already has come down in price about 50%.

And the first 4k OLED, the Sony BVM-X300. While it's difficult to perceive 4k resolution on a display of this size, the image is gorgeous and it will no doubt be the Cadillac of 4k professional monitors once it's out. Sony was being typically mum about the specifics so release date and price are currently unknown.

Sony BVM-X300 4k OLED Professional Monitor. I apologize for the terrible picture.

Sony A7s Digital Stills and 4k Video Camera

I'll briefly touch on the Sony A7s as I'm an A7r owner and have absolutely fallen in love with the camera. To those interested in how these cameras stack up, the Sony Alpha A7, A7r, and A7s are all full frame, mirrorless, E-mount, and have identical bodies.

The A7 is 24.3 MP, 6000x4000 stills, ISO 100-25,600, body only is $1698.

The A7r is the A7 minus the optical low pass filter and higher resolution 36.4 MP, 7360x4912 stills, ISO 100-25,600, body only is $2298.

The A7s is 12.2 MP, 4240x2832 stills, ISO 50-409,600, body only price is $2498.

If anything, I think the A7s is indicative of an ever rising trend - small, relatively inexpensive cameras that shoot high resolution stills and video. I'm guessing that most future cameras after a certain price point will be "4k-capable". That doesn't mean I would choose to shoot motion pictures on a camera like this. When cameras this small are transformed into production mode, they require too many unwieldy and cumbersome accessories. The shooter and/or camera department just ends up fighting with the equipment. I want to work with gear that facilitates doing your best work and in my experience with production, this is not repurposed photography equipment.

Interestingly enough though, despite this, the A7s seems to be much more a 4k video camera than a 4k raw stills camera. On the sensor level, every pixel in its 4k array is read out without pixel binning, which allows it to output over HDMI 8 bit 4:2:2 YCbCr Uncompressed 3840x2160 video in different gammas including SLog 2. This also allows for incredibly improved sensor sensitivity with an ISO range from 50 to 409,600. The camera has quite a lot of other video-necessary features such as timecode, picture profiles, and balanced XLR inputs with additional hardware. The A7s' internal video recording is HD only, which means that 4k recording must be done with some sort of off-board HDMI recorder.

As is evident from many wares at this year's show, if you can produce a small on-camera monitor then it might as well record a variety of video signals as well.

Enter the Atomos Shogun. It's purpose built for cameras like the Sony A7s and, at $1995, has a very impressive feature set.

Hey what camera is that?

Shooting my movie with this setup doesn't sound fun but the Shogun with the A7s will definitely be a great option for filmmakers on micro budgets.

One cool and unexpected feature of shooting video on these Sony cameras with the Sony e-mount lenses (there aren't many choices just yet) is that autofocus works surprisingly well. I've been playing around with this using the A7r and the Vario-Tessar 24-70mm zoom shooting 1080 video. The lens focuses itself in this mode surprisingly well which is great for docu and DIY stuff. I have to say I'm not terribly impressed with this lens in general though.

Sony Vario-Tessar T* FE 24-70mm f/4 ZA OSS Lens

It's quite small, light, and the auto focus is good but F4 sucks. The bokeh is sharp and jagged instead of smooth and creamy and it doesn't render the space of the scene as nicely as the Canon L Series Zooms which is too bad. Images from this lens seem more spatially compressed than they should.

At Sony's booth I played around with their upcoming FE 70-200mm f/4.0 G OSS Lens on an A7s connected in 4k to a PVM-X300 via HDMI. I was even less impressed with this lens, not to mention quite a bit of CMOS wobble and skew coming out of the A7s. It wasn't the worst I've seen but definitely something to be aware of. This really should come as no surprise though for a camera in this class and even Sony's test footage seems to mindfully conceal it.

Pomfort's LiveGrade Pro v2

As a DIT, I'd be remiss if I didn't mention LiveGrade Pro.

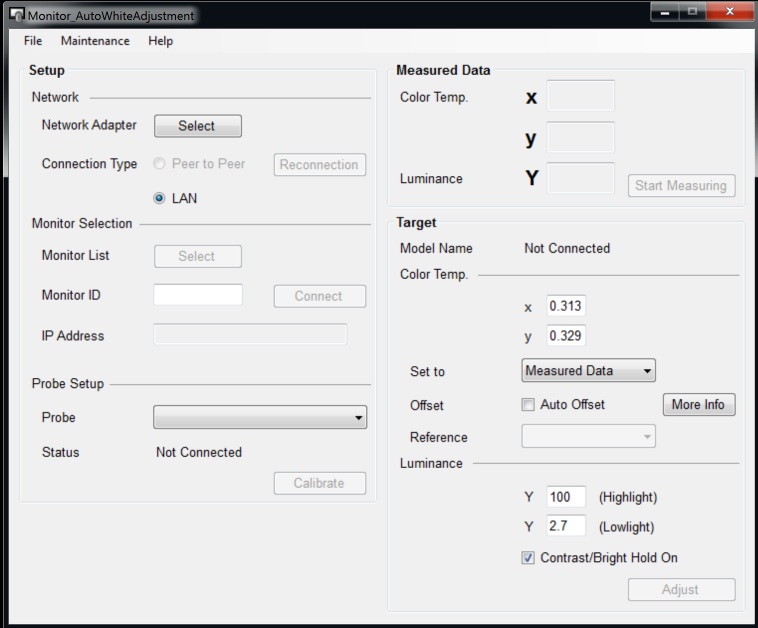

LiveGrade Pro is a powerful color management solution now with GUI options, a Color Temperature slider that affects RGB gains equally, stills grading, ACES, and support for multiple LUT display hardware devices. Future features include a Tint Slider for the Green-Magenta axis nestled between Color Temp and Saturation. Right Patrick Renner? :)

Conclusion

So what's the next big epiphany? Is it this?

What is Jaunt? Cinematic Virtual Reality.

Jaunt and Oculus Rift were apparently at NAB this year and had a demo on the floor. This writer, however, was unable to find it. My time was unfortunately very limited but other than Jaunt and the invite-only Dolby Vision demo, I'm feeling like I saw what I needed to see. What will be uncovered at next year's show? More of the same? Or a few things that are radically new and fresh?

© 2021 Bennett Cain / All Rights Reserved /
